bagging machine learning examples

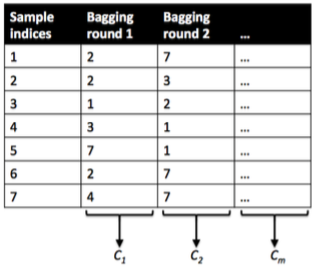

Bagging, boosting, and stacking. In the example above, the training set has 7 samples.

What Is the Difference Between Bagging and Boosting? (Quantdare)

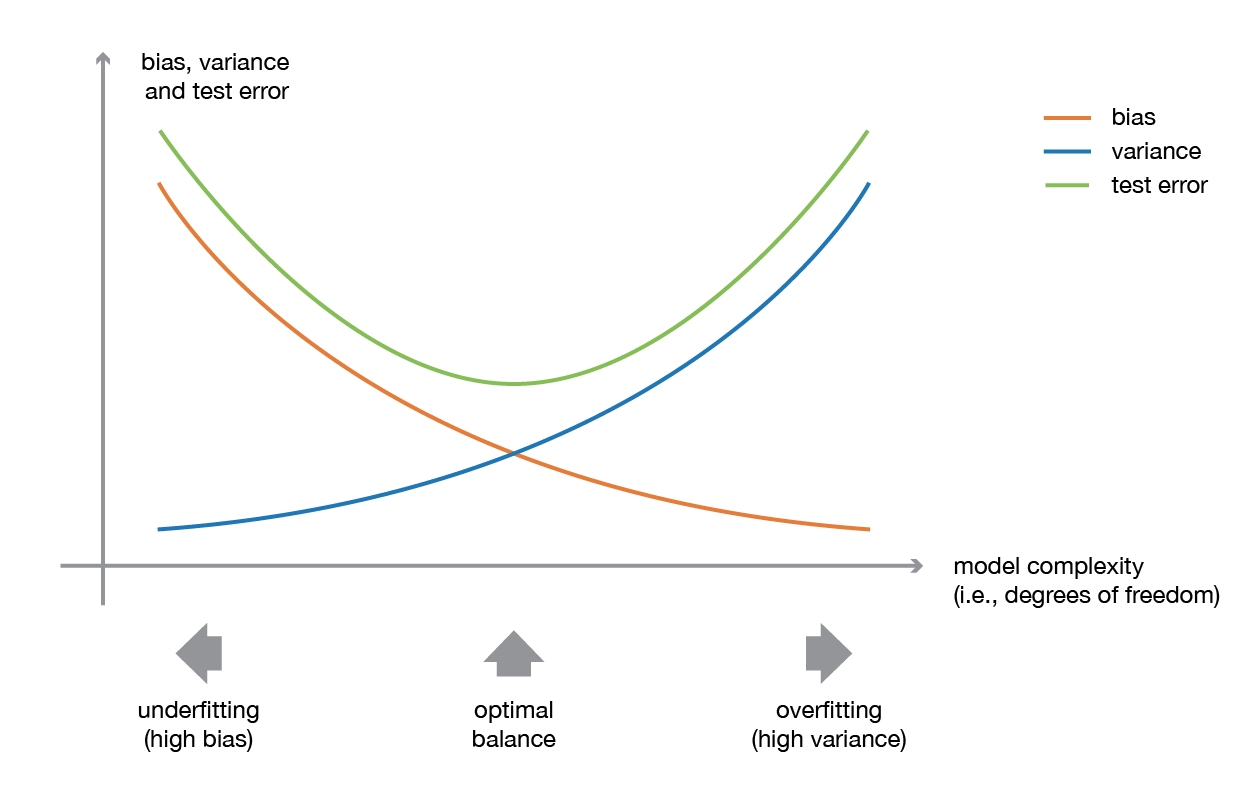

Bagging can be an effective approach to reduce the variance of a model, which helps prevent overfitting, and to increase the …
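A standard way to see why averaging reduces variance: assume each of the $B$ bagged predictors $\hat f_b(x)$ has variance $\sigma^2$ and every pair has correlation $\rho$ (symbols introduced here for illustration). The variance of their average is then

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
  = \frac{1}{B^2}\Big(B\sigma^2 + B(B-1)\rho\sigma^2\Big)
  = \rho\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

As $B$ grows, the second term shrinks toward zero, so averaging removes most of the variance that is not shared between predictors. The remaining $\rho\sigma^2$ term is why less correlated base learners benefit more from bagging.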

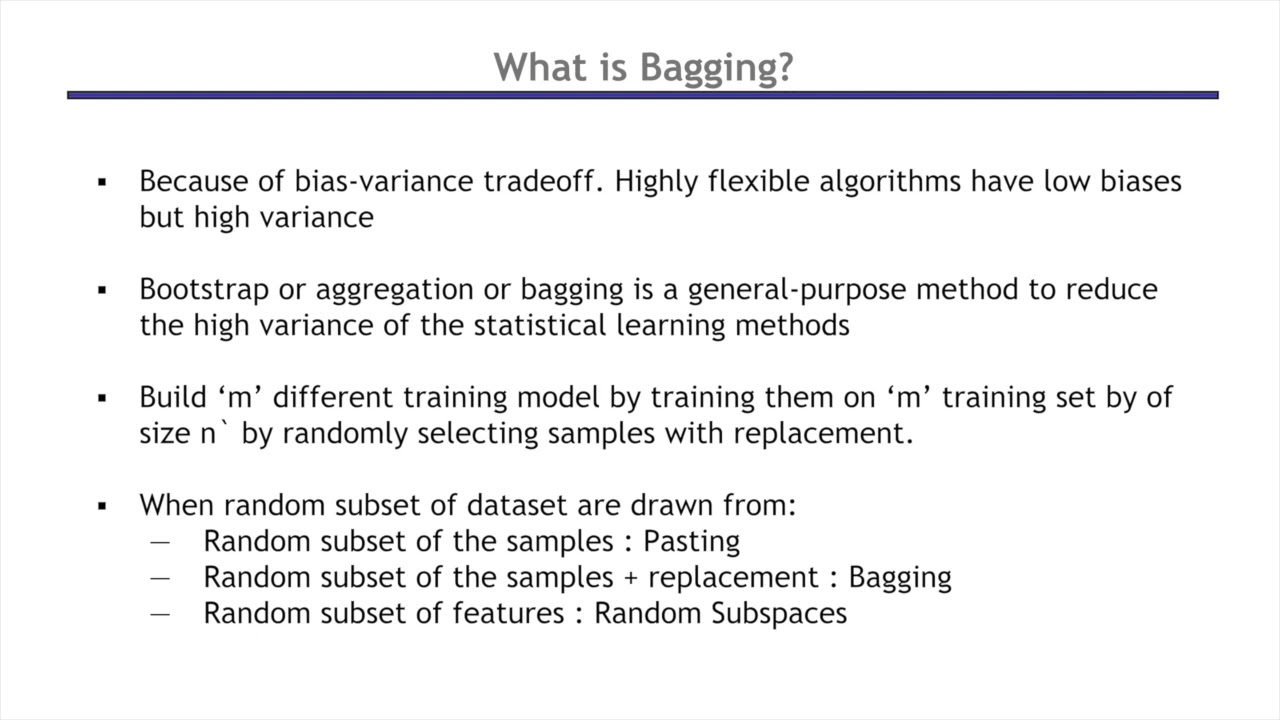

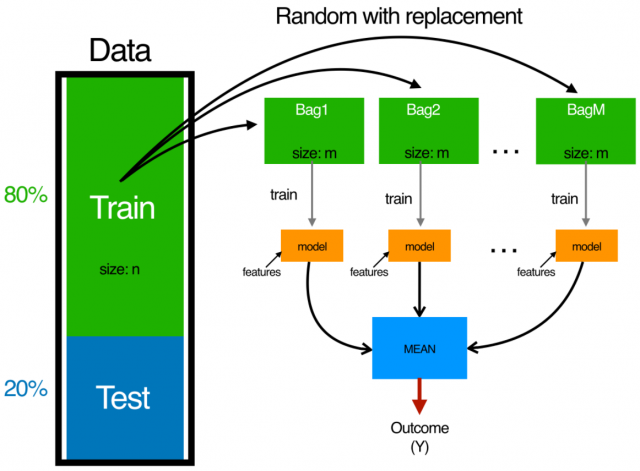

Bagging is a simple technique that is covered in most introductory machine learning texts. A good example is IBM's Green Horizon Project, wherein environmental statistics from varied … Bagging is a type of ensemble machine learning approach that combines the outputs from many learners to improve performance.
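How the learners' outputs are combined depends on the task. A common convention, assumed in this sketch, is a majority vote for classification and a simple average for regression. The helper names below are hypothetical:

```python
from collections import Counter
from statistics import mean


def aggregate_classification(predictions):
    """Majority vote over the class labels predicted by each base learner."""
    # most_common(1) returns [(label, count)]; ties go to the label seen first
    return Counter(predictions).most_common(1)[0][0]


def aggregate_regression(predictions):
    """Average of the numeric predictions made by each base learner."""
    return mean(predictions)


# Hypothetical outputs of five base learners for a single input
print(aggregate_classification(["cat", "dog", "cat", "cat", "dog"]))  # cat
print(aggregate_regression([2.0, 3.0, 4.0, 3.5, 2.5]))  # 3.0
```

Averaging suits regression because the outputs are numbers; voting suits classification because labels cannot be averaged directly.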

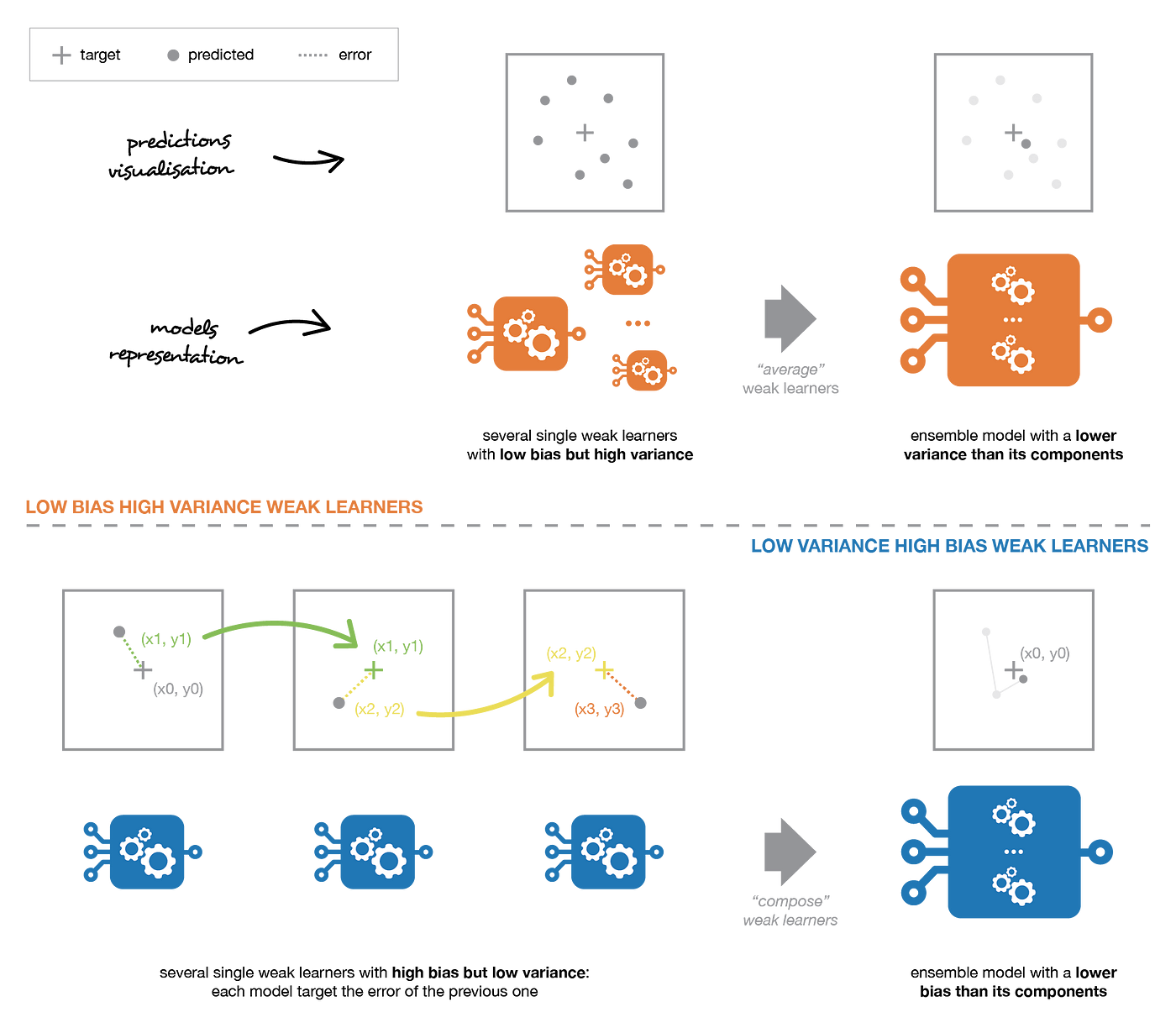

In the first section of this post, we will present the notions of weak and strong learners and introduce the three main ensemble learning methods. The size of the data set for each predictor is 4. To read the original article, see Bagging in Machine Learning Guide.
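The sampling in the example (7 training samples, 4 drawn for each predictor) can be sketched with the standard library. This assumes draws are uniform and with replacement, so a sample may repeat an item; the number of predictors (3) and the sample labels are illustrative:

```python
import random


def bootstrap_sample(data, size, rng):
    """Draw `size` items from `data` uniformly at random, with replacement."""
    return [rng.choice(data) for _ in range(size)]


training_set = ["x1", "x2", "x3", "x4", "x5", "x6", "x7"]  # 7 samples, as in the example
rng = random.Random(42)  # fixed seed so the draws are reproducible

# One sample of size 4 per predictor; duplicates are allowed
for predictor in range(3):
    print(f"predictor {predictor}: {bootstrap_sample(training_set, 4, rng)}")
```

The classic bootstrap draws as many items as the training set contains; drawing fewer, as here, corresponds to sub-sampling each predictor's data.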

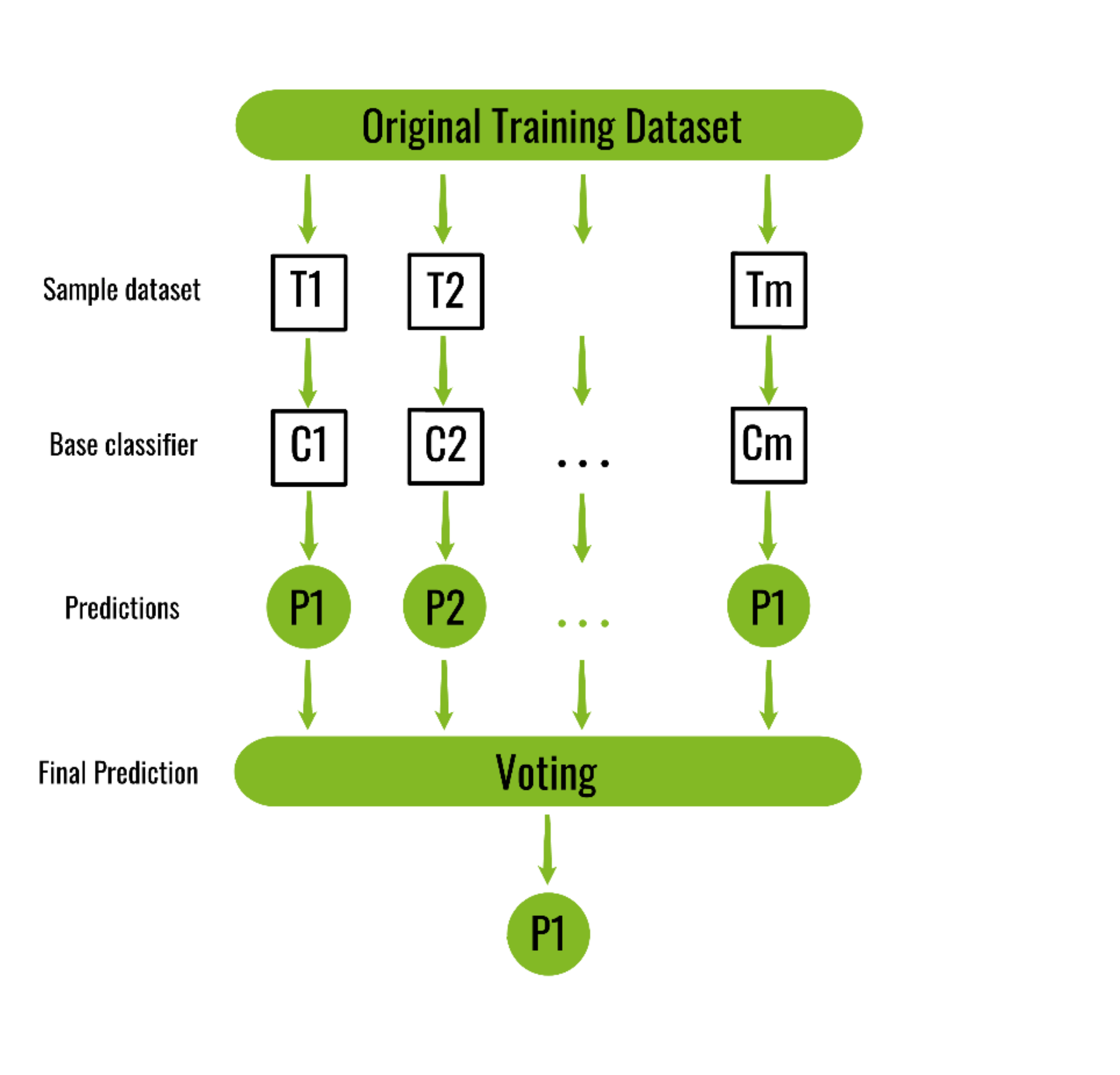

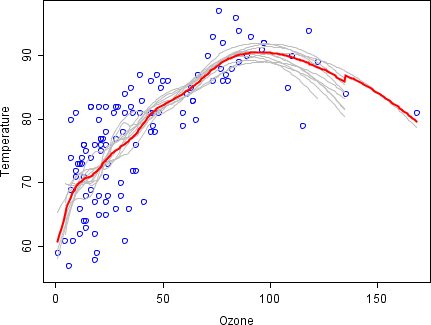

Visual showing how training instances are sampled for a predictor in bagging ensemble learning. Bagging ensembles can be implemented from scratch, although this can be challenging for beginners. Difference Between Bagging and Boosting.
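As a rough from-scratch sketch, assuming one-dimensional inputs, binary labels, and a decision stump as the base learner (all illustrative choices, not taken from a particular library), bagging reduces to three steps: draw a bootstrap sample, fit a learner to it, and take a majority vote:

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a 1-D decision stump: pick the threshold and orientation with the fewest training errors."""
    best = None
    for t in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= t else right) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right


def fit_bagging(xs, ys, n_estimators, rng):
    """Train one stump per bootstrap sample (same size as the data, drawn with replacement)."""
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def predict_bagging(models, x):
    """Majority vote across all stumps."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


# Toy data: class 0 for small x, class 1 for large x, with one noisy label at x=5
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [0, 0, 0, 1, 0, 1, 1, 1, 1, 1]
models = fit_bagging(xs, ys, n_estimators=25, rng=random.Random(0))
print([predict_bagging(models, x) for x in xs])
```

A stump is a deliberately weak, high-variance learner, which is the situation where averaging over bootstrap samples tends to help most.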

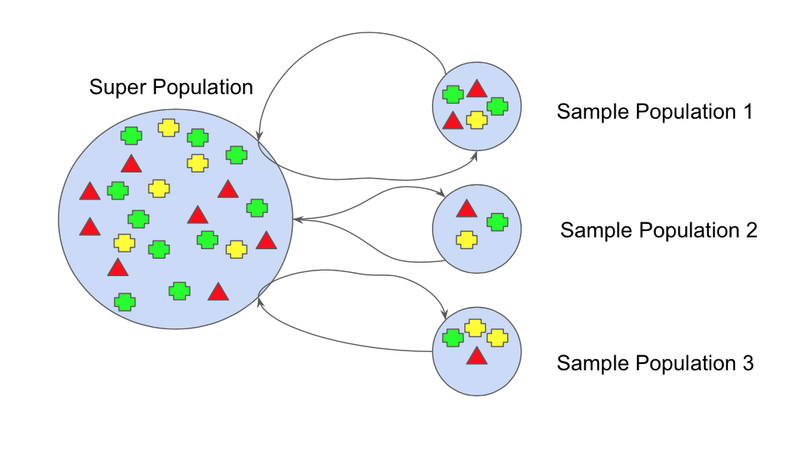

Bagging is a machine learning technique that can help create a better model by randomly sampling from the original data. For example, we have 1000 … How to Implement Bagging From …
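Random sampling with replacement means each bootstrap sample leaves out some rows; those rows are called "out-of-bag". Assuming a hypothetical training set of 1,000 rows and a bootstrap sample of the same size, this sketch compares a simulated fraction of distinct rows with the closed-form expectation $1 - (1 - 1/n)^n$:

```python
import random


def unique_fraction(n, rng):
    """Fraction of distinct original rows that appear in one bootstrap sample of size n."""
    drawn = {rng.randrange(n) for _ in range(n)}
    return len(drawn) / n


n = 1000  # hypothetical training-set size
rng = random.Random(0)
print(f"simulated: {unique_fraction(n, rng):.3f}")
print(f"expected:  {1 - (1 - 1 / n) ** n:.3f}")  # tends to 1 - 1/e ≈ 0.632 as n grows
```

So each base learner sees roughly 63% of the distinct rows, and the remaining ~37% can serve as a built-in validation set for that learner.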

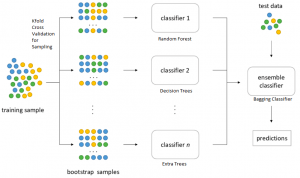

Machine learning algorithms can help boost environmental sustainability. Bagging, also known as bootstrap aggregation, is an ensemble learning method commonly used to reduce variance within a noisy dataset. `BaggingClassifier(base_estimator=None, n_estimators=10, max_samples=1.0, max_features=1.0, bootstrap=True)`.
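`BaggingClassifier` is scikit-learn's bagging implementation. The sketch below fits it on synthetic data with the default-style settings spelled out. In recent scikit-learn releases the base-learner argument is named `estimator` (older releases used `base_estimator`), so it is left at its default, a decision tree, to avoid depending on the version:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split

# Synthetic binary classification data (illustrative only)
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# The base learner is left at its default (a decision tree)
clf = BaggingClassifier(
    n_estimators=10,   # number of base learners
    max_samples=1.0,   # each sample is as large as the training set
    max_features=1.0,  # every learner sees all features
    bootstrap=True,    # draw rows with replacement
    random_state=0,
)
clf.fit(X_train, y_train)
print(f"test accuracy: {clf.score(X_test, y_test):.3f}")
```

Lowering `max_samples` or `max_features` below 1.0 makes each learner see less data, which usually increases diversity among the learners at the cost of weaker individual models.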

Bagging aims to improve accuracy and performance. In machine learning, when the relationship between a set of predictor variables and a response variable is linear, we can model it using methods like multiple linear regression. The post Bagging in Machine Learning Guide appeared first on finnstats.

These algorithms work by drawing random subsets of the training set, training a model on each subset, and then combining the models' predictions to generate an overall prediction for … Random forest is one type of bagging method. For an example, see the tutorial.
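Random forest extends bagged decision trees by also choosing a random subset of features at each split, which makes the individual trees less correlated. This sketch compares the two in scikit-learn on synthetic data; the dataset sizes and `n_estimators=100` are illustrative assumptions, and the scores will vary with the data:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score

X, y = make_classification(n_samples=500, n_features=20, n_informative=5, random_state=0)

# Plain bagging of decision trees: every split may consider all 20 features
bagged_trees = BaggingClassifier(n_estimators=100, random_state=0)

# Random forest: bootstrap rows *and* consider only int(sqrt(20)) = 4 features per split
forest = RandomForestClassifier(n_estimators=100, max_features="sqrt", random_state=0)

for name, model in [("bagged trees", bagged_trees), ("random forest", forest)]:
    scores = cross_val_score(model, X, y, cv=5)
    print(f"{name}: mean CV accuracy {scores.mean():.3f}")
```

Setting `max_features=None` in `RandomForestClassifier` lets every split consider all features, which makes it behave much like the plain bagged trees above.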

Then, in the second section, we will focus on bagging and discuss notions such as bootstrapping, bagging, and random forests. Bootstrap aggregation (bagging) is an ensembling method that attempts to reduce overfitting in classification or regression problems. Two examples of ensemble methods are boosting and bagging.

In bagging, a random sample of …

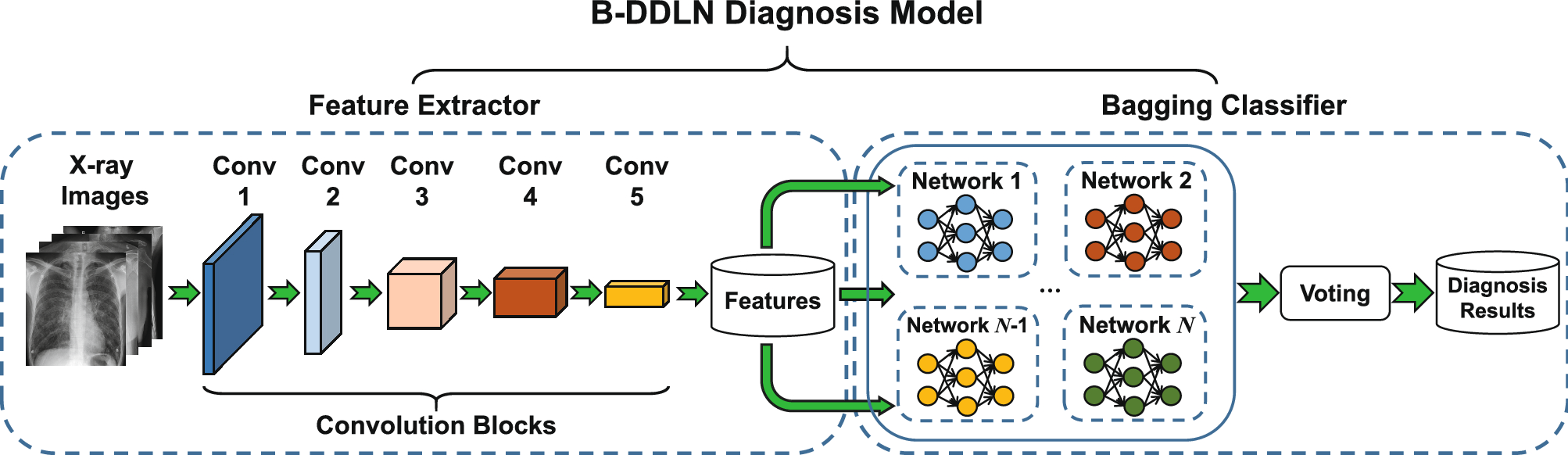

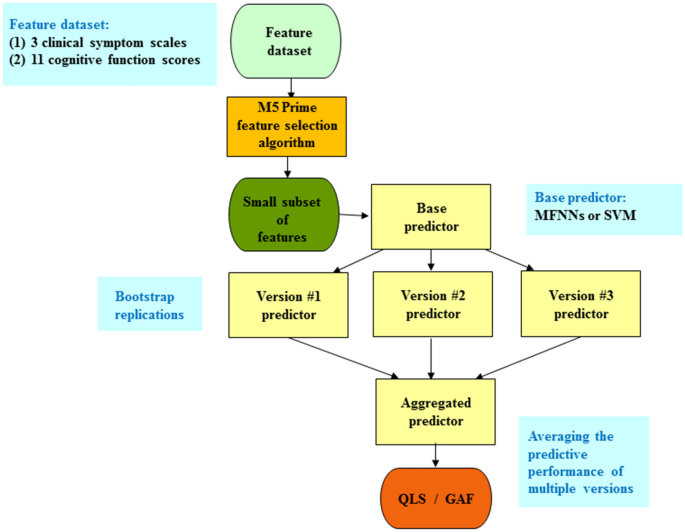

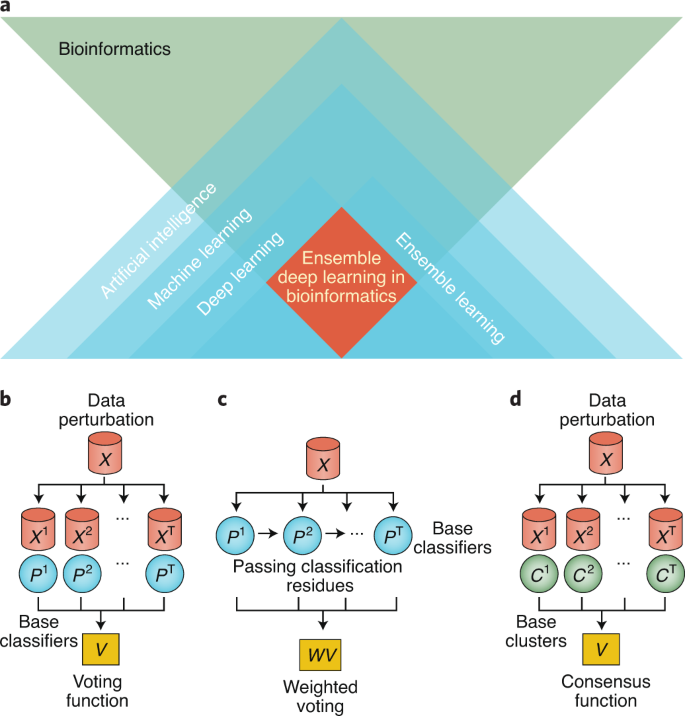
